The quest for faster, more efficient computing often leads us down complex technological rabbit holes, but few areas are as foundational as memory. If you've been tracking the relentless pace of innovation, you've likely heard the buzz around DDR5, the latest iteration of Double Data Rate synchronous dynamic random-access memory. What started as an evolutionary leap in 2020 has rapidly matured into the standard shaping the future of memory, both in today's market and for years beyond. This isn't just a marginal upgrade; it's a multi-year roadmap promising staggering performance, unprecedented capacities, and a fundamental shift in how both everyday users and massive data centers interact with their systems.

DDR5 isn't merely bridging the gap to some distant future; it is the future, with significant development headroom still untapped. From the next-gen gaming rig you’re dreaming of to the sprawling, AI-powered server farms crunching petabytes of data, DDR5’s ongoing evolution is orchestrating a quiet revolution.

At a Glance: What You Need to Know About DDR5's Future

- Long-Term Dominance: DDR5 is here to stay, with DDR6 not expected on consumer platforms until late 2029 or 2030, and even later for data centers.

- Constant Evolution: JEDEC, the memory standards body, regularly updates specifications, with a 2024 revision enhancing performance and adding crucial anti-rowhammer protection.

- Process Shrinks Drive Gains: Leading memory makers (Samsung, Micron, SK hynix) are using advanced manufacturing nodes (1γ/1c, 1δ/1d) to dramatically improve speed, power efficiency, and capacity.

- Blazing Speeds: Expect mainstream DDR5 to reach 9200 MT/s, with enthusiast modules hitting 9600 MT/s and future platforms pushing past 10,000 MT/s.

- Massive Capacities: New process technologies enable larger individual memory chips (32 Gb, 48 Gb, 64 Gb), leading to staggering module capacities (up to 256 GB and beyond).

- Specialized Solutions: Clock Unbuffered DIMMs (CUDIMMs) for client PCs and Multiplexed Rank Dual Inline Memory Modules (MRDIMMs) for data centers are key innovations for extreme performance and scalability.

- Data Center Powerhouse: 2nd Gen MRDIMMs will deliver unparalleled bandwidth (102.4 GB/s per module, or about 1.6 TB/s for a CPU with 16 memory channels) for next-gen servers.

The DDR5 Era: A Marathon, Not a Sprint

When DDR5 first arrived on the scene, its initial performance gains over high-end DDR4 felt incremental to some. Yet, those early days merely hinted at the potential. Unlike previous memory generations that saw relatively quick turnovers, DDR5 is set for a long reign. Think of it less as a sprint to the next standard and more as a marathon of continuous improvement, where each new manufacturing node and specification update unlocks more of its inherent power.

The JEDEC standard, the blueprint for how DDR5 operates, is a living document. Its 2024 update, for instance, didn't just tweak performance metrics; it also added an anti-rowhammer feature. Rowhammer is a reliability and security problem in which repeatedly activating ("hammering") one DRAM row can flip bits in physically adjacent rows, corrupting data that was never written to. Building a mitigation into the standard itself is a critical stability and security enhancement, and this kind of continuous refinement helps keep DDR5 robust, secure, and future-proof for years to come.
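To make the idea concrete, here is a toy Python model of counter-based rowhammer mitigation, the general approach behind the 2024 revision's Per-Row Activation Counting (PRAC). It is a simplified sketch, not the JEDEC mechanism: the class name, the `threshold` value, and the "refresh both neighbors" policy are illustrative assumptions.

```python
from collections import defaultdict


class RowActivationCounter:
    """Toy model of counter-based rowhammer mitigation (illustrative only).

    Each row counts how often it has been activated. When a row reaches
    `threshold` activations, its physically adjacent rows (the likely
    victims) are refreshed and the aggressor's counter is reset. The
    threshold and neighbor policy are assumptions, not JEDEC parameters.
    """

    def __init__(self, num_rows: int, threshold: int) -> None:
        self.num_rows = num_rows
        self.threshold = threshold
        self.counts = defaultdict(int)   # row index -> activations since last mitigation
        self.refreshed_victims = []      # log of victim rows refreshed, in order

    def activate(self, row: int) -> None:
        """Record one activation of `row` and mitigate if it hits the threshold."""
        self.counts[row] += 1
        if self.counts[row] >= self.threshold:
            # Hammering an aggressor row disturbs charge in its neighbors,
            # so refresh those victim rows before bits can flip.
            for victim in (row - 1, row + 1):
                if 0 <= victim < self.num_rows:
                    self.refreshed_victims.append(victim)
            self.counts[row] = 0


dram = RowActivationCounter(num_rows=1024, threshold=1000)
for _ in range(2500):      # hammer row 10
    dram.activate(10)
print(dram.refreshed_victims)  # [9, 11, 9, 11]
print(dram.counts[10])         # 500
```

Real DRAM tracks counts in hardware and coordinates mitigation with the memory controller; the point here is only that counting activations per row lets the device react before neighbors accumulate enough disturbance to flip bits.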

The Engine Room: How Process Technology Fuels DDR5's Ascent

At the heart of DDR5's evolution lies the relentless innovation in manufacturing process technology. Just like CPU or GPU manufacturers shrink their transistors, memory makers are constantly refining their DRAM fabrication processes to achieve better performance, lower power consumption, and smaller chip sizes – what the industry calls PPA (Power, Performance, Area).

Leading the charge are the memory giants: Samsung, Micron, and SK hynix. These companies have journeyed from 17-19nm nodes in the mid-2010s to astonishingly compact ~12nm today. Samsung’s 14nm (D1α) technology was instrumental in producing the first 24 Gb DDR5-7200 device in late 2021, a significant milestone.

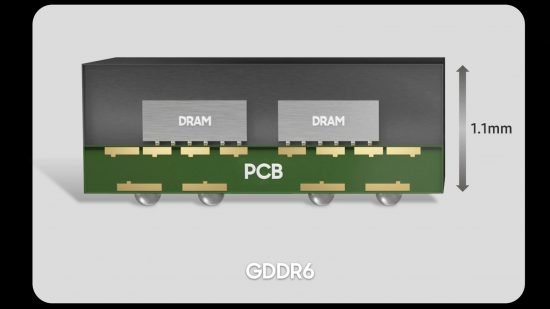

Today, Micron and SK hynix have unveiled their 10nm-class 1γ/1c nodes, while Samsung counters with its D1β (12.5nm), a node slightly smaller than the comparable nodes of its direct competitors (Micron G5 at 13nm, SK hynix at 12.65nm). These microscopic improvements aren't just for bragging rights. The 1γ/1c process, in particular, is a game-changer. It's the cornerstone enabling the next wave of high-capacity, ultra-high-performance memory across various segments, including not just DDR5, but also HBM4 (for AI accelerators), GDDR7 (for graphics cards), and LPDDR5X (for mobile devices).

Looking further out, the upcoming 1δ/1d production node, anticipated in the second half of 2026, represents the final iteration of the 10nm-class nodes using the traditional 6F2 cell design. The real breakthrough comes in late 2027-2028 with the arrival of sub-10nm nodes, potentially led by Samsung. These next-gen nodes will introduce a revolutionary 4F2 cell structure, a fundamental architectural shift that promises even greater density and efficiency, paving the way for truly unprecedented memory capabilities.
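The density argument comes down to cell area, which is quoted as a multiple of F², where F is the minimum feature size. The sketch below compares a 6F² and a 4F² cell at the same F. The 12.5 nm value is only an illustrative assumption (node names do not map exactly onto F), and real chip density also depends on peripheral circuitry, so treat the ratio as the cell-array effect alone rather than a product forecast.

```python
def cell_area_nm2(feature_nm: float, f2_multiple: int) -> float:
    """Area of one DRAM cell in nm^2 for a cell design of f2_multiple * F^2."""
    return f2_multiple * feature_nm ** 2


F_NM = 12.5  # illustrative feature size in nm (assumption)

area_6f2 = cell_area_nm2(F_NM, 6)  # traditional 6F^2 cell
area_4f2 = cell_area_nm2(F_NM, 4)  # 4F^2 cell planned for sub-10nm nodes

print(area_6f2, area_4f2)   # 937.5 625.0
print(area_6f2 / area_4f2)  # 1.5 (cells per unit area gained by moving to 4F^2)
```

At equal F, the 4F² layout needs two-thirds of the area, so about 1.5× as many cells fit in the same array footprint; any shrink in F compounds on top of that.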

Breaking Speed Barriers: From Enthusiast Rigs to Enterprise Beasts

The beauty of DDR5 lies in its incredible scalability. Whether you're a gamer, a professional content creator, or managing a vast server farm, there's a DDR5 solution being engineered to meet your demands for speed.

Mainstream & Enthusiast Gains

JEDEC's JESD79-5C specification adds DDR5-8800 as its new top speed grade, with CL62 for premium parts and CL78 for more budget-friendly ones. The CL62 bin works out to an absolute latency of about 14 nanoseconds, while CL78 lands at roughly 17.7 ns.
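Absolute latency is easy to compute from the two numbers on a module's label. DDR memory performs two transfers per clock cycle, so one clock lasts 2000 / (data rate in MT/s) nanoseconds, and the first-word latency is CL times that. The helper name below is our own; the CL62 and CL78 bins are the ones cited above.

```python
def absolute_latency_ns(data_rate_mts: int, cas_latency: int) -> float:
    """First-word CAS latency in nanoseconds.

    DDR moves two transfers per clock, so the clock runs at data_rate / 2 MHz
    and one cycle lasts 2000 / data_rate nanoseconds.
    """
    return cas_latency * 2000 / data_rate_mts


for cl in (62, 78):
    print(f"DDR5-8800 CL{cl}: {absolute_latency_ns(8800, cl):.1f} ns")
# DDR5-8800 CL62: 14.1 ns
# DDR5-8800 CL78: 17.7 ns
```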

But actual products are pushing even further. Micron envisions its 1γ/1c process DRAMs reaching up to 9200 MT/s, a blistering pace. SK hynix is hot on its heels, preparing 9200 MT/s memory devices with capacities up to 32 Gb. For those building bleeding-edge gaming PCs or high-end workstations, brands like Corsair, G.Skill, and V-Color are expected to deliver enthusiast-grade modules hitting 9600 MT/s. These are speeds that seemed out of reach just a few years ago.

The 10,000 MT/s+ Club: Beyond Raw Speed

The pursuit of speed doesn't stop at 9600 MT/s. The next frontier will break the 10,000 MT/s barrier. Intel’s Arrow Lake-S Refresh CPUs, expected early next year, are anticipated to usher in DDR5-10,000 modules, swiftly followed by DDR5-10,700.

Achieving these ultra-high data rates requires more than just faster memory chips; it demands robust signal integrity. This is where Clock Unbuffered DIMMs (CUDIMMs) come into play. These modules integrate a dedicated clock driver (CKD) to ensure stable and clean signals at extreme speeds. Currently, Intel platforms are the primary adopters of CUDIMMs.

AMD, however, isn't far behind. They're slated to introduce CUDIMM and CSODIMM (SODIMMs with CKD for laptops) support in 2026. This will start with their Granite Ridge Refresh processors, targeting up to 10,000 MT/s, and extend to their Zen 6 microarchitecture (e.g., Medusa Point) for speeds of 10,700 MT/s or even higher. It’s clear that both major CPU players are committed to pushing the limits of client memory performance.

Interestingly, while chasing peak clock speeds, AMD is also focused on absolute latency for consumer platforms. It is targeting DDR5-6400 at an aggressive CL26, which works out to an absolute latency of about 8 nanoseconds. This is a crucial distinction: raw data rate is impressive, but for responsiveness in gaming or real-time applications, low latency often provides a more tangible benefit. Understanding this balance is key when choosing among the DDR5 modules available today.
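The same latency formula shows why chasing speed and latency at once is hard. This sketch takes AMD's DDR5-6400 CL26 target as the reference and asks what CAS latency a faster module would need to match it; the helper names and the 8800/9600 MT/s comparison points are illustrative choices, not announced products.

```python
def latency_ns(data_rate_mts: int, cas_latency: int) -> float:
    """Absolute CAS latency: CL cycles x (2000 / data rate) ns per cycle."""
    return cas_latency * 2000 / data_rate_mts


def max_matching_cl(ref_rate_mts: int, ref_cl: int, new_rate_mts: int) -> int:
    """Largest whole CL at new_rate_mts whose latency is no worse than the reference.

    Equal latency means CL_new = CL_ref * new_rate / ref_rate; integer floor
    division rounds down so the result never exceeds the reference latency.
    """
    return ref_cl * new_rate_mts // ref_rate_mts


print(f"DDR5-6400 CL26: {latency_ns(6400, 26):.3f} ns")  # 8.125 ns
for rate in (8800, 9600):
    cl = max_matching_cl(6400, 26, rate)
    print(f"DDR5-{rate}: CL{cl} or lower ({latency_ns(rate, cl):.3f} ns)")
# DDR5-8800: CL35 or lower (7.955 ns)
# DDR5-9600: CL39 or lower (8.125 ns)
```

Because CL is counted in cycles, a faster module needs a proportionally higher CL just to stand still on absolute latency.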

Capacity Kings: Scaling Memory for Tomorrow's Demands

Beyond speed, raw memory capacity is equally vital, especially as applications become more memory-intensive and data sets grow exponentially. New production technologies are pivotal in enabling larger individual memory integrated circuits (ICs), which in turn lead to much higher module capacities.

The initial 1α/1a processes allowed for the creation of 24 Gb DDR5 devices, which translated into practical module sizes like 24 GB, 48 GB, and even 96 GB. This was a significant jump from the typical 8 GB or 16 GB DDR4 modules most users were accustomed to.

The current 1β/1b and 1γ/1c nodes push this even further, enabling 32 Gb DDR5 ICs. These denser chips are the foundation for unbuffered DIMMs and CUDIMMs with capacities of 64 GB, 128 GB, and a staggering 256 GB. Imagine a workstation or even a high-end desktop with 256 GB or 512 GB of RAM – that's the kind of future these advancements are making possible.

Looking ahead, 48 Gb DDR5 ICs, built on the 1δ/1d process technologies, are projected to arrive in 2027-2028. And for the most demanding applications, particularly in data centers, 64 Gb DDR5 devices are expected from 2030 onwards. These colossal chips will leverage the sub-10nm production nodes (0x/0y) and their 4F2 cell structures, doubling per-die density again relative to today's 32 Gb parts.
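Module capacity follows from die density and how many dies sit on the module. The sketch below assumes a non-ECC DIMM whose ranks each fill a 64-bit data bus (two 32-bit subchannels on DDR5) with x8 devices, so each rank needs eight packages. The function name, rank counts, and stacked-die configurations in the examples are illustrative assumptions, not product specifications.

```python
def module_capacity_gb(die_density_gbit: int, ranks: int,
                       dies_per_package: int = 1,
                       bus_width_bits: int = 64,
                       device_width_bits: int = 8) -> int:
    """Usable DIMM capacity in GB, ignoring any extra ECC devices.

    Each rank must fill the data bus, so it needs bus_width / device_width
    packages (8 packages of x8 devices for a 64-bit bus); stacked packages
    hold several dies each. 8 gigabits make one gigabyte.
    """
    packages_per_rank = bus_width_bits // device_width_bits
    total_dies = ranks * packages_per_rank * dies_per_package
    return total_dies * die_density_gbit // 8


# Illustrative configurations (assumptions, not specific products):
# (die density in Gb, ranks, dies per package)
for density, ranks, stack in [(24, 1, 1), (24, 2, 1), (24, 2, 2),
                              (32, 2, 1), (32, 2, 2), (32, 4, 2),
                              (48, 2, 1), (64, 2, 1)]:
    print(f"{density} Gb x {ranks} rank(s) x {stack}-die stack: "
          f"{module_capacity_gb(density, ranks, stack)} GB")
```

The arithmetic shows why denser dies matter: doubling die density doubles module capacity without adding packages or ranks, which would otherwise load the memory bus more heavily.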

Data Centers Redefined: The Power of MRDIMMs

While client PCs prioritize a balance of speed and capacity, data centers operate on a different scale, demanding extreme bandwidth and massive scalability. Traditional Registered DIMMs (RDIMMs), while reliable, eventually hit bandwidth limitations. This is where Multiplexed Rank Dual Inline Memory Modules (MRDIMMs) enter the fray, representing a paradigm shift for server memory.

MRDIMMs work by running two DDR5 memory ranks in a multiplexed mode. This is achieved through specialized components: a multiplexing registered clock driver (MRCD) and multiplexing data buffer (MDB) chips. The ingenious part? The CPU communicates with the MRDIMM at a high data rate (e.g., 8800 MT/s), while the individual memory chips on the module operate at half that speed (e.g., 4400 MT/s). Because the buffers combine data from both ranks, the module delivers roughly twice the bandwidth of a single rank without requiring faster DRAM chips, easing the burden on the memory itself while still feeding the CPU immense bandwidth.
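Conceptually, the multiplexing is time-division interleaving: two ranks each supply data at the lower rate, and the buffer alternates between them on the host side. The toy Python model below shows only that idea; the function names are hypothetical, and it does not model the actual MRCD/MDB command and timing protocol.

```python
from itertools import chain


def multiplex(rank_a: list, rank_b: list) -> list:
    """Interleave transfers from two ranks into one host-side stream (A, B, A, B...)."""
    if len(rank_a) != len(rank_b):
        raise ValueError("both ranks must supply the same number of transfers")
    return list(chain.from_iterable(zip(rank_a, rank_b)))


def host_data_rate(dram_rate_mts: int, ranks_multiplexed: int = 2) -> int:
    """Host-side data rate when each rank fills alternate transfer slots."""
    return dram_rate_mts * ranks_multiplexed


rank_a = ["A0", "A1", "A2", "A3"]
rank_b = ["B0", "B1", "B2", "B3"]
print(multiplex(rank_a, rank_b))  # ['A0', 'B0', 'A1', 'B1', 'A2', 'B2', 'A3', 'B3']
print(host_data_rate(4400))       # 8800
```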

Intel’s Xeon 6 platform is the first to embrace this technology, supporting 1st-generation MRDIMMs at 8800 MT/s. This allows servers to achieve significantly higher memory bandwidth and capacity without requiring entirely new memory chip designs.

The Next Generation: Unlocking Exascale Performance

The real game-changer, however, will be 2nd Generation MRDIMMs. These are currently under development, promising even higher capacities (256 GB and above) and mind-boggling speeds of 12,800 MT/s. Such advancements are crucial for the next wave of AI, machine learning, and high-performance computing applications that demand incredible memory throughput.

Both AMD and Intel are heavily invested in this future. AMD's EPYC 'Venice' (2026) and 'Verano' (2027) CPUs are slated to support 2nd Gen MRDIMMs, as are Intel’s Xeon 'Diamond Rapids' and 'Coral Rapids' processors in the latter half of this decade. Each 2nd Gen MRDIMM will provide a peak of 102.4 GB/s (12,800 MT/s × 8 bytes per transfer over a 64-bit data bus). A server CPU with 16 memory channels could therefore reach a theoretical peak bandwidth of about 1.6 TB/s (16 × 102.4 GB/s = 1,638.4 GB/s). This level of memory performance is essential for feeding the hungry processing units of future data centers. The DDR5 specification is even designed to support up to 17,600 MT/s in multiplexed mode, showcasing the immense headroom still available within this standard.
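The bandwidth figures follow from data rate times bus width. This sketch computes theoretical peak bandwidth in decimal GB/s for a 64-bit data bus (ECC bits excluded), assuming one MRDIMM per channel; the function name is our own, and sustained bandwidth in real systems will be lower.

```python
def peak_bandwidth_gbs(data_rate_mts: int, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in decimal GB/s: transfers/s x bytes per transfer."""
    bytes_per_transfer = bus_width_bits // 8          # 64-bit bus -> 8 bytes
    return data_rate_mts * bytes_per_transfer / 1000  # MB/s -> GB/s


gen1 = peak_bandwidth_gbs(8_800)    # 1st-gen MRDIMM
gen2 = peak_bandwidth_gbs(12_800)   # 2nd-gen MRDIMM
channels = 16                       # example CPU, one MRDIMM per channel (assumption)

print(gen1)             # 70.4
print(gen2)             # 102.4
print(gen2 * channels)  # 1638.4  (about 1.6 TB/s)
```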

Beyond the Hype: Practical Considerations for Adopters

With all these exciting developments, how do you navigate the DDR5 landscape today and in the near future? It's about making informed choices based on your specific needs.

Choosing the Right DDR5 for You

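- Latency vs. Speed: For everyday tasks and most gaming, a balance of speed and latency is ideal. While higher MT/s numbers look great on paper, lower absolute latency can often provide more noticeable real-world responsiveness. Keep in mind that CL (CAS Latency) is counted in clock cycles, so it only means something alongside the data rate: CL30 at 6000 MT/s and CL40 at 8000 MT/s both work out to 10 ns. AMD’s focus on roughly 8 ns absolute latency at 6400 MT/s is a prime example of this optimization for consumer experience.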

- Capacity Needs: While 16 GB or 32 GB is sufficient for most users, content creators, engineers, and power users will benefit immensely from higher capacities. With 64 GB, 128 GB, and even 256 GB modules becoming more prevalent, future-proofing your system with ample RAM is easier than ever. Consider your typical workload: running multiple virtual machines, editing 8K video, or compiling large codebases will undoubtedly benefit from more RAM.

Compatibility Check: Not All DDR5 is Created Equal

- Platform Support: Ensure your CPU and motherboard explicitly support the DDR5 speeds and features you're considering. While mainstream motherboards support standard DDR5, features like CUDIMM are currently more prevalent on Intel platforms, with AMD catching up in 2026. Always check your motherboard's Qualified Vendor List (QVL) for specific memory module compatibility.

- Module Type: For consumer systems, you’ll typically be looking at unbuffered DIMMs. For extreme speeds on compatible platforms, CUDIMMs will become an option. For servers, RDIMMs are common, but MRDIMMs are the future for high-bandwidth applications.

Investment Horizon: DDR5's Longevity

One of the most reassuring aspects of DDR5 is its long roadmap. With DDR6 not expected for consumer platforms until late 2029 or even 2030, investing in a robust DDR5 setup now means you’re well-equipped for the foreseeable future. The continuous improvements in speed and capacity mean that early DDR5 adopter systems will still benefit from new, faster modules as they become available. You won't be staring down obsolescence just a year or two after your purchase.

Common Questions & Memory Myths Debunked

Navigating the world of memory can raise a lot of questions. Let's tackle some common ones.

- Is DDR5 worth it over DDR4 today?

For a new build, absolutely. While initial DDR5 prices were high, they've stabilized. The performance uplift, especially with modern CPUs and high-refresh-rate gaming, is significant. More importantly, it provides a much longer upgrade path and access to future platform features that DDR4 simply can't offer.

- Will DDR6 arrive soon and make DDR5 obsolete?

No, not soon. DDR6 is still years away from consumer adoption (late 2029-2030 at the earliest). DDR5 has a substantial lifespan ahead, continually improving with new process nodes and JEDEC specifications. It will be the dominant memory standard for the better part of this decade.

- What's the real benefit of ultra-high clock speeds (e.g., 9600 MT/s vs. 6000 MT/s)?

For general productivity, the difference might be subtle. However, for memory-intensive tasks like video editing, scientific simulations, or high-refresh-rate gaming, higher data rates can translate into shorter load times, smoother frame rates, and faster processing, because the CPU can move data to and from memory more quickly and is less likely to stall waiting on it. The advent of CUDIMMs will further improve the stability and real-world applicability of these extreme speeds.

- Are higher capacity DDR5 modules (e.g., 64 GB) becoming more affordable?

Yes, as process technologies mature and production volumes increase, the cost per gigabyte of DDR5 has been steadily decreasing. The move to 32 Gb and 48 Gb ICs will further drive down the cost of high-capacity modules, making 64 GB and 128 GB more accessible to power users.

What's Next on the Horizon: The Road to DDR6 and Beyond

DDR5's journey is far from over. It will remain the dominant memory technology until at least the late 2020s, continuously evolving and adapting to the insatiable demands of computing. This extended lifespan is fueled by several key factors: the relentless advancement of production nodes, the introduction of ultra-fast CUDIMMs for client PCs, and the transformative potential of high-bandwidth MRDIMMs for next-generation data center platforms.

The innovations we're seeing, like the shift to the 4F2 cell structure in sub-10nm nodes, are not just incremental steps; they are foundational changes that will carry us into future memory generations, including DDR6 and beyond. This continuous cycle of improvement means that memory will always be a dynamic and exciting field.

So, as you look towards your next system upgrade or consider the infrastructure for future AI workloads, rest assured: DDR5 is not just keeping pace; it's leading the charge, setting the stage for an unprecedented era of computing performance. The future of memory isn't just fast; it's smart, efficient, and incredibly expansive.